Boston Dynamics Atlas Video

Some notes on what's going on in "Atlas Goes Hands On" and how it compares

Boston Dynamics posted a new video called "Atlas Goes Hands On" showing their electric Atlas robot autonomously moving engine covers between containers. It's a really cool demonstration of high-precision manipulation over a long horizon.

According to Boston Dynamics:

- The robot receives as input a list of bin locations to move parts between.

- Atlas uses a machine learning vision model to detect and localize the environment fixtures and individual bins [0:36].

- The robot uses a specialized grasping policy and continuously estimates the state of manipulated objects to achieve the task. There are no prescribed or teleoperated movements; all motions are generated autonomously online.

- The robot is able to detect and react to changes in the environment (e.g., moving fixtures) and action failures (e.g., failure to insert the cover, tripping, environment collisions [1:24]) using a combination of vision, force, and proprioceptive sensors.
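To make the "detect and react" point concrete, here is a minimal sketch of a perceive-act-verify loop with retries. It is only an illustration of the general pattern, not Boston Dynamics' implementation: `StepResult`, `run_with_recovery`, and the toy `world` dictionary are all hypothetical names, and the "fixture gets bumped" scenario is invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StepResult:
    success: bool
    reason: str = ""


def run_with_recovery(
    perceive: Callable[[], float],
    act: Callable[[float], None],
    verify: Callable[[], StepResult],
    max_attempts: int = 3,
) -> List[StepResult]:
    """Re-perceive the target before every attempt and retry until verification passes."""
    history: List[StepResult] = []
    for _ in range(max_attempts):
        target = perceive()   # fresh estimate, so a moved fixture is picked up
        act(target)           # try the insertion at the current estimate
        result = verify()     # e.g. a vision or force check in a real system
        history.append(result)
        if result.success:
            break
    return history


# Toy stand-ins (assumptions): the fixture is bumped 5 cm during the first attempt.
world = {"fixture_x": 0.50, "hand_x": 0.0, "bumped": False}


def perceive() -> float:
    return world["fixture_x"]


def act(target_x: float) -> None:
    world["hand_x"] = target_x
    if not world["bumped"]:
        world["fixture_x"] += 0.05
        world["bumped"] = True


def verify() -> StepResult:
    error = abs(world["hand_x"] - world["fixture_x"])
    if error < 0.01:
        return StepResult(True)
    return StepResult(False, f"misaligned by {error:.2f} m")


history = run_with_recovery(perceive, act, verify)
for attempt, result in enumerate(history, start=1):
    print(attempt, result.success, result.reason)
```

In this toy run the first attempt fails because the fixture moved, and the second succeeds because the loop re-perceives before acting again. The real system presumably does something much richer, continuously and at several levels of the stack.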

The video is a great example of how a traditional robotics stack can create really impressive real-world behaviors using perception. Boston Dynamics videos in the past have not shown this kind of perception-based reasoning, so it’s interesting to discuss what they’re actually doing here.

Grasping

The robot walks over and grabs the engine cover. At 0:41 we are treated to a visualization of what’s going on.

The inset image is from their perception system.

It looks to me like they have multiple specialized object detectors running here: finding bins and segmenting out the object that we care about (the blue tinted engine cover here).

Placement

When moving to placement, you can see them taking advantage of the distinctly non-humanoid behaviors that Atlas is capable of: its joints can rotate a full 360 degrees, so it’s much easier to plan motions to get to particular joint states. This is something I think would be harder to teach via imitation learning if you wanted to go that way (they don’t seem to!). Humans don’t move this way, but a robot can.

Interesting to note that here it only uses one hand. This means that it needs a really firm grasp on the object to keep it steady; it’s not how a human would perform this task at all, in my opinion.

Maybe they’re not able to do two-handed manipulation yet? Or if they are, maybe it’s not reliable enough to do multiple times in a row?

We see the same thing here. Corners are reliably detected and tracked, as is the object. It looks like they’re overlaying a 3D model of the engine cover.

Comparison

This is a very different result from similar demos by 1X and Figure, which show pick-and-place manipulation but are fully end-to-end. One of the big debates in robotics right now is which of these approaches will scale better to real-world problems.

The boring answer which is almost certainly correct is, “some mixture of the two extremes,” but that’s vague and not really worth discussing right now.

The advantages of what Boston Dynamics is doing are:

- Repeatability in a particular environment (as evidenced by the number of repeated trials in this video).

- Incredibly high precision: the insertion task is probably beyond the demonstrated capabilities of the e2e humanoids. But how long will it remain that way?

- Better able to take advantage of the unique hardware. Features like the full rotation on all the robot joints would require different techniques and may be hard to teach, since humans can’t do this. You could still go full e2e via reinforcement learning here though.

- Teaching relative keypoints to known objects (approach this mesh from this direction to grasp the engine cover) is very easy and can be done quickly.
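As a rough illustration of why relative keypoints are cheap to teach: once perception gives you the object's pose, a grasp taught once in the object's own frame carries over to wherever the object ends up via a single transform composition. The sketch below assumes 4x4 homogeneous transforms and uses NumPy; the helper names, the 20 cm / 10 cm offset, and the example object pose are all made up for illustration and say nothing about how Atlas actually represents grasps.

```python
import numpy as np


def make_transform(yaw_rad, translation):
    """Build a 4x4 homogeneous transform: rotation about z by yaw_rad, plus a translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def grasp_pose_in_world(T_world_object, T_object_grasp):
    """Compose the detected object pose with a grasp taught in the object frame."""
    return T_world_object @ T_object_grasp


# Taught once (hypothetical values): grasp 20 cm along the object's x-axis, 10 cm above it.
T_OBJECT_GRASP = make_transform(0.0, [0.2, 0.0, 0.1])

# From perception at runtime (hypothetical values): object at (1, 2, 0.5), yawed 90 degrees.
T_world_object = make_transform(np.pi / 2, [1.0, 2.0, 0.5])

T_world_grasp = grasp_pose_in_world(T_world_object, T_OBJECT_GRASP)
print(np.round(T_world_grasp[:3, 3], 3))  # grasp position in the world frame
```

The taught offset never changes; only `T_world_object` does. That is also the catch: the whole thing leans on having a known object model and a reliable pose estimate for it.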

Disadvantages:

- How well does it scale? If you need custom object detectors (corners, etc.), this might be a lot of trouble.

- Requires known object models. That's true in many but not all factory and logistics settings; a company like Amazon sees a truly bewildering range of different objects.

- These kinds of systems often require a lot of human effort to deploy in new environments, and have lots of hyperparameters to tune.

Conclusions

I'm glad people are trying different strategies, including hybrid ones (Tesla seems to be in this camp to me). As always, Boston Dynamics knows how to put on a really damned good demo. Their Spot robots are probably the most immediately usable mobile manipulators on the market, so it’s not like they don’t have a track record of “shipping” something.

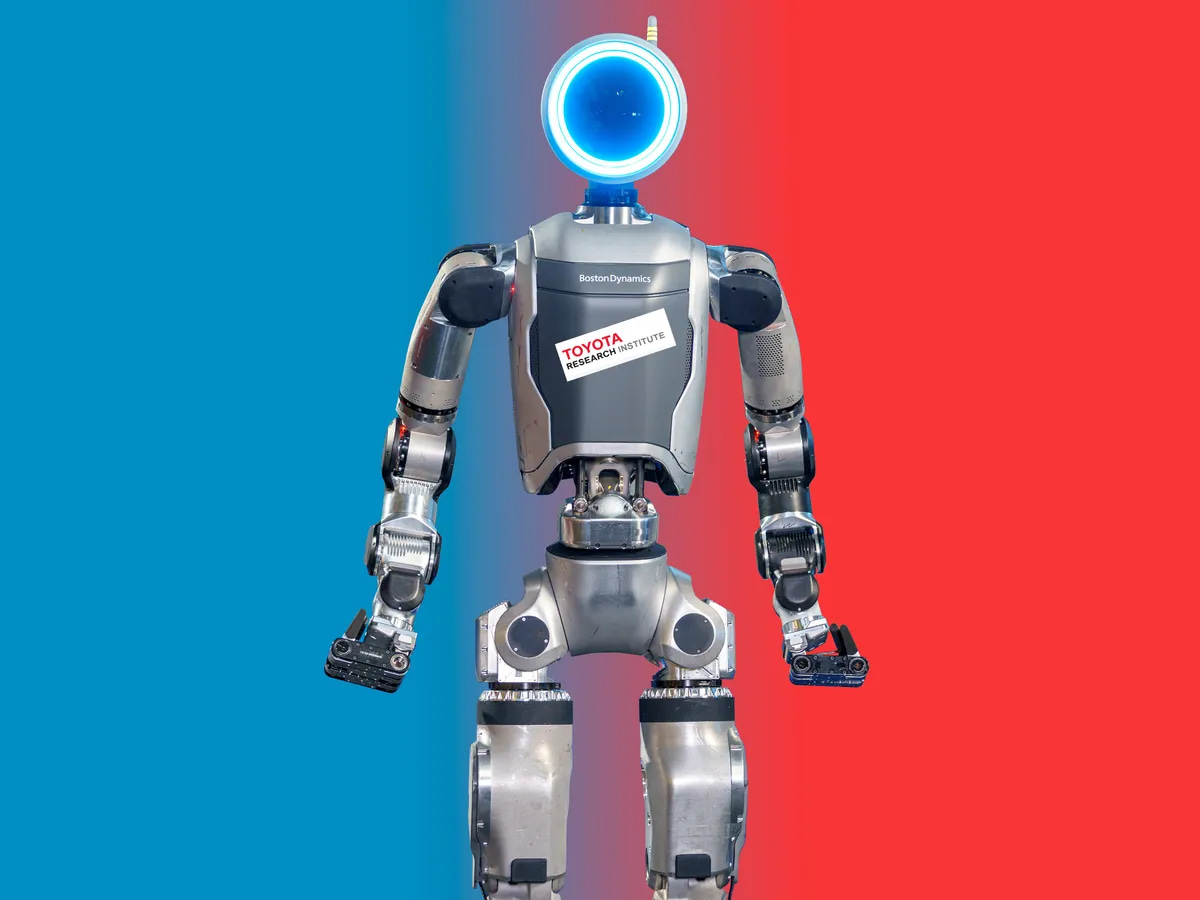

In addition, their recent partnership with Toyota Research Institute suggests that they are looking at more learning and AI. I expect that we’ll see future demos with a stronger learning component.

But the real challenges for making robots useful are all about generalization, something we can almost never see from these demos. Building amazing demos has been possible in robotics for a very, very long time, so don’t read too much into any particular video like this one.

If you liked this you can subscribe for more random posts like this one. If you didn’t like it, you can also subscribe so as to comment angrily on my posts.