Paper Notes: Points2Plans

"Strong generalization to unseen long-horizon tasks in the real world"

Long-horizon reasoning is fascinating, and figuring out how to enable it is probably what drives a lot of people, myself included, to be so interested in robotics and AI. Today I’m going through an interesting paper that does some simple long-horizon reasoning for robots: Points2Plans [1].

We occasionally see truly impressive examples of AI doing long-horizon reasoning: AlphaGo wowed the world back in 2016 when it defeated world-champion Go player Lee Sedol. More recently, OpenAI released its o1 series of models for advanced reasoning.

The goal of long-horizon reasoning is to perform a previously unseen, multi-step task that involves repeatedly taking actions and interacting with the world.

We rarely see this kind of thing in robotics. The closest examples probably come from a field called Task and Motion Planning (also Integrated Task and Motion Planning, or just TAMP) [2], which deserves a longer post because it’s a fascinating sub-area.

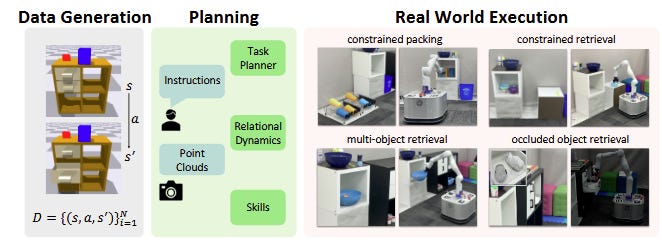

The Method

First, we assume:

Object segmentation and classification (e.g. from Detic, OWLv2, etc.)

Point cloud observations of the scene

A library of action/skill primitives (pick, place, etc.) that operate on the segmented point clouds above and let us interact with the world

The task is then to compute a plan (a sequence of action primitives, each applied to observed objects) that maximizes the probability of achieving a given goal.

Note that this is very different from, e.g., the OpenVLA model of the world, where actions are actual robot positions. Here, actions are closer to API calls: an action is something like “pick(object 3).” This is more like SayCan, the paper that kicked off the whole LLMs-in-robotics revolution.
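To make the “actions as API calls” idea concrete, here is a minimal sketch of how such a plan might be represented. The class names, the skill library, and the arity check are hypothetical illustrations (not the paper’s code), and the per-object point clouds are omitted for brevity.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SegmentedObject:
    """One segmented object from the perception stack (hypothetical format)."""
    obj_id: int
    label: str  # e.g. a class name from an open-vocabulary detector


@dataclass(frozen=True)
class Action:
    """A skill primitive applied to observed objects, e.g. pick(3)."""
    skill: str
    args: Tuple[int, ...]

    def __str__(self) -> str:
        return f"{self.skill}({', '.join(map(str, self.args))})"


# Hypothetical skill library: skill name -> number of object arguments.
SKILLS: Dict[str, int] = {"pick": 1, "place": 2, "push": 1}


def is_well_formed(plan: List[Action], scene: List[SegmentedObject]) -> bool:
    """True if every action uses a known skill, with the right arity, on observed objects."""
    ids = {o.obj_id for o in scene}
    return all(
        a.skill in SKILLS
        and len(a.args) == SKILLS[a.skill]
        and all(i in ids for i in a.args)
        for a in plan
    )


if __name__ == "__main__":
    scene = [SegmentedObject(3, "mug"), SegmentedObject(5, "shelf")]
    plan = [Action("pick", (3,)), Action("place", (3, 5))]
    print(" -> ".join(map(str, plan)))  # pick(3) -> place(3, 5)
    print(is_well_formed(plan, scene))  # True
```

The point of the sketch is that a plan is discrete and symbolic: the planner only chooses skill names and object IDs, and the skills themselves handle the continuous motion.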

This lets us phrase the problem as an ML-enabled version of classic symbolic planning: generate candidate action sequences, score them to see if they are any good, and then deploy the best one on the robot.
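The generate, score, deploy loop can be sketched as a function with three pluggable pieces. The generator, scorer, executor, and the `threshold` parameter are hypothetical placeholders; in Points2Plans the scoring role is played by the learned dynamics model, so treat this as the general pattern rather than the paper’s implementation.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Action = Tuple[str, Tuple[int, ...]]  # e.g. ("pick", (3,))
Plan = List[Action]


def plan_and_execute(
    generate: Callable[[], Iterable[Plan]],
    score: Callable[[Plan], float],
    execute: Callable[[Action], None],
    threshold: float = 0.5,  # hypothetical minimum score worth deploying
) -> Optional[Plan]:
    """Generate candidate plans, keep the best-scoring one, and run it open-loop."""
    best_plan: Optional[Plan] = None
    best_score = float("-inf")
    for plan in generate():
        s = score(plan)
        if s > best_score:
            best_plan, best_score = plan, s
    if best_plan is None or best_score < threshold:
        return None  # nothing good enough to send to the robot
    for action in best_plan:
        execute(action)  # open-loop: no re-planning between steps
    return best_plan


if __name__ == "__main__":
    candidates = [[("pick", (3,))], [("pick", (3,)), ("place", (3, 5))]]
    chosen = plan_and_execute(
        generate=lambda: candidates,
        score=lambda p: 0.9 if len(p) == 2 else 0.2,  # toy scorer
        execute=lambda a: print("executing", a),
    )
    print(chosen)
```

Note that execution happens only after scoring is finished, which is exactly the open-loop structure discussed at the end of this post.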

Learning a Latent Symbolic Dynamics Model

To assess the quality of a potential plan, we need either an explicit or implicit model of the effects of the robot’s actions.

Many works, e.g. Code as Policies [3], only do this implicitly: they generate a whole action plan (as code, in that case) without reasoning about what effects the actions will have on the world. Others, like SORNet [4], predict these symbols explicitly: the model takes in an image and outputs the symbolic predicates that hold in the scene, allowing for iterated symbolic planning. This is closer to what we see in Points2Plans, though the authors learn a latent dynamics model instead.

Part of the problem with symbolic planning is that predicting the effects of symbolic actions in a continuous, messy world can be very difficult, as can grounding the symbols in the first place.

Fortunately, the proposed approach solves both problems:

By training on sim data to predict a set of predicates (something that’s worked well in prior work), the paper solves the grounding problem.

They also learn a dynamics model, so that we can predict the effects of our actions and decide which plan to follow.
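How these two learned pieces compose can be illustrated with a toy. In the paper, as I understand it, the encoder, dynamics model, and predicate classifier are neural networks trained in sim on point clouds; here all three are hypothetical hand-written stand-ins that use per-object centroids as the “latent” state, purely to show how a plan is rolled out and then checked for predicates without touching the real world. The lift and placement offsets are made-up constants.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Latent = Dict[int, Vec3]  # toy "latent": object id -> centroid


def encode(clouds: Dict[int, List[Vec3]]) -> Latent:
    """Stand-in for the learned point-cloud encoder: one centroid per segmented object."""
    return {
        obj_id: tuple(sum(p[i] for p in pts) / len(pts) for i in range(3))
        for obj_id, pts in clouds.items()
    }


def step(z: Latent, skill: str, args: Tuple[int, ...]) -> Latent:
    """Stand-in for the learned dynamics model: predict the next state from the current one."""
    z = dict(z)  # never mutate the input state
    if skill == "pick":
        x, y, h = z[args[0]]
        z[args[0]] = (x, y, h + 0.3)  # assume the gripper lifts the object 30 cm
    elif skill == "place":
        obj, target = args
        tx, ty, th = z[target]
        z[obj] = (tx, ty, th + 0.1)  # assume it comes to rest 10 cm above the target's centroid
    return z


def p_on(z: Latent, a: int, b: int) -> float:
    """Stand-in for the predicate classifier: a soft probability that on(a, b) holds."""
    (ax, ay, ah), (bx, by, bh) = z[a], z[b]
    horiz = math.hypot(ax - bx, ay - by)
    dh = ah - bh
    # Positive margin when a is within 5 cm of b horizontally and 2 to 20 cm above it.
    margin = min(0.05 - horiz, dh - 0.02, 0.2 - dh)
    logit = max(-5.0, min(5.0, 100.0 * margin))  # clamp so math.exp never overflows
    return 1.0 / (1.0 + math.exp(-logit))


def rollout(z0: Latent, plan: List[Tuple[str, Tuple[int, ...]]]) -> Latent:
    """Chain the dynamics model over a whole plan, entirely in the model."""
    z = z0
    for skill, args in plan:
        z = step(z, skill, args)
    return z


if __name__ == "__main__":
    clouds = {3: [(0.0, 0.0, 0.0), (0.0, 0.0, 0.1)], 5: [(0.5, 0.0, 0.4), (0.5, 0.0, 0.6)]}
    z0 = encode(clouds)
    zH = rollout(z0, [("pick", (3,)), ("place", (3, 5))])
    print(round(p_on(z0, 3, 5), 3), round(p_on(zH, 3, 5), 3))  # 0.007 0.993
```

The design point is the split: grounding lives in the predicate decoder, effects live in the dynamics step, and planning only ever calls the two in sequence.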

So, the planner can be thought of as optimizing the objective from the paper, where:

q1 represents the probability of choosing skills (e.g., from an LLM)

q2 represents the probability of choosing skill arguments (like which object to manipulate)

and the quantity being optimized is the likelihood that the goal is satisfied.
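For reference, here is one way to write that objective in my own notation. It is a hedged paraphrase of the definitions above, not the paper’s exact equation: $a_t$ is the skill at step $t$, $o_t$ its arguments, $H$ the plan length, $s_0$ the initial point cloud observation, $E$ and $f$ stand for the learned encoder and latent dynamics model, and $p(g \mid z_H)$ is the predicted probability that the goal predicates hold in the final latent state.

$$
\left(a_{1:H}^{*},\, o_{1:H}^{*}\right) \;=\; \arg\max_{a_{1:H} \sim q_1,\; o_{1:H} \sim q_2} \; p\!\left(g \mid z_H\right),
\qquad z_0 = E(s_0), \quad z_t = f\!\left(z_{t-1}, a_t, o_t\right)
$$

Read this as a sampling-based search: candidate skills are drawn from $q_1$ and arguments from $q_2$, each candidate is rolled out through the dynamics model, and the one with the highest predicted goal likelihood is kept.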

Results

The paper shows higher success rates on its tasks and, importantly, some generalization to unseen long-horizon tasks. Combining search with learning helps the learned components generalize outside of the training distribution!

Using LLMs to guide sampling was important for planning speed and correctness. That’s interesting, and to me it implies that something is wrong with the predicate prediction model (probably sim-to-real transfer issues), because plan correctness should be guaranteed by the rest of the method.

Correction: I misread the paper here. According to the authors, LLM guidance doesn’t directly affect the correctness of the resulting plans, but it dramatically improved speed, since the LLM never actually output an invalid plan.

Where do we go from here?

I liked this work, and I think it’s a really nice solution to the problem described. I glossed over a lot of details (including most of the actual method) [1], so check out the paper if you are interested.

One big limitation is that this is open-loop: it does not rethink steps or detect errors. I’m also curious how well GPT-4o, for example, handles predicate classification, and how well LLM-based approaches work on their own.

References

[1] Huang, Y., Agia, C., Wu, J., Hermans, T., & Bohg, J. (2024). Points2Plans: From Point Clouds to Long-Horizon Plans with Composable Relational Dynamics. arXiv preprint arXiv:2408.14769.

[2] Garrett, C. R., Chitnis, R., Holladay, R., Kim, B., Silver, T., Kaelbling, L. P., & Lozano-Pérez, T. (2021). Integrated task and motion planning. Annual Review of Control, Robotics, and Autonomous Systems, 4(1), 265-293.

[3] Liang, J., Huang, W., Xia, F., Xu, P., Hausman, K., Ichter, B., ... & Zeng, A. (2023, May). Code as policies: Language model programs for embodied control. In 2023 IEEE International Conference on Robotics and Automation (ICRA) (pp. 9493-9500). IEEE.

[4] Yuan, W., Paxton, C., Desingh, K., & Fox, D. (2022, January). SORNet: Spatial object-centric representations for sequential manipulation. In Conference on Robot Learning (pp. 148-157). PMLR.

Thanks for the write-up, Chris!

I just wanted to note that while the LLM didn't help with correctness in terms of the symbolic planning, it did help significantly with speed. More critically, it is what enabled translation from natural language to predicates. We could always use the graph-search-based planner from our previous work once given a logical goal, but the robot never needed to!

That's because what we did find is that the LLM never gave us logically inconsistent plans. We can verify any generated plan against simple mutual exclusion rules on the predicates to make sure it never creates conflicting states like `on(A,B) & on(B,A)`. We could always fall back to the graph search to find the skill sequence if this check failed.
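The consistency check described above can be sketched in a few lines. The state format (a set of `("on", X, Y)` tuples), the two mutex rules, and the `choose_plan`/`fallback_search` names are illustrative assumptions, not the authors' code; `fallback_search` is a hypothetical stand-in for the graph-search planner mentioned above.

```python
from itertools import combinations
from typing import Callable, List, Optional, Sequence, Set, Tuple

Predicate = Tuple[str, str, str]  # e.g. ("on", "A", "B")


def mutex_violations(state: Set[Predicate]) -> List[Tuple[Predicate, Predicate]]:
    """Pairs of predicates in `state` that cannot hold at the same time."""
    ons = sorted(p for p in state if p[0] == "on")
    bad = []
    for p, q in combinations(ons, 2):
        _, a, b = p
        _, c, d = q
        if a == d and b == c:  # on(A,B) & on(B,A): two objects on each other
            bad.append((p, q))
        elif a == c:  # on(A,B) & on(A,C): one object resting on two supports
            bad.append((p, q))
    return bad


def first_inconsistent_step(states: Sequence[Set[Predicate]]) -> Optional[int]:
    """Index of the first predicted state that breaks a rule, or None if all are consistent."""
    for t, state in enumerate(states):
        if mutex_violations(state):
            return t
    return None


def choose_plan(
    llm_plan: list,
    llm_states: Sequence[Set[Predicate]],
    fallback_search: Callable[[], list],
) -> list:
    """Keep the LLM plan if its predicted states are consistent, else fall back to search."""
    if first_inconsistent_step(llm_states) is None:
        return llm_plan
    return fallback_search()


if __name__ == "__main__":
    ok = {("on", "A", "B"), ("on", "B", "table")}
    bad = {("on", "A", "B"), ("on", "B", "A")}
    print(mutex_violations(bad))
    print(first_inconsistent_step([ok, bad]))  # 1
```

Checking pairwise rules like these is cheap, which is why it works well as a gate in front of a slower, exhaustive search.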

I think this is also a place for interesting improvement: we could look at more complex logical implications as a form of verification. Further, we could do more informed searches to repair issues with the LLM plan instead of simply switching to a completely separate, uninformed search algorithm.

And we agree on the need for feedback; hopefully we'll have an update on that in a few months. :)