Giving Robots a Sense of Touch

Why tactile sensors currently aren't widely used in robotics, and why that might change soon

While we humans are largely visual creatures, we can’t rely solely on our eyes to perform tasks. This contrasts with most modern robot learning, where best practice is to set tasks up so that the information needed to perform them is visually available at every moment.

Humans, obviously, do not need this. We have myriad other senses to fall back upon: our sense of proprioception, the long “context window” constituting our memory and internal world model, and, of course, our sense of touch.

Touch, though, is something that’s often not touched on in robotics research. Take the deeply impressive π0.5 Vision-Language-Action model, or VLA, as an example. It’s perhaps the quintessential VLA, trained by the team that popularized VLAs in the first place, and it shows unmatched performance across a range of tasks and previously unseen home environments. Yet it uses only proprioception and onboard cameras.

Recently, though, as mentioned in my ICRA 2025 writeup, there has been a renewed wave of interest in tactile sensing. So I’d like to talk a little about why we don’t see many people using tactile sensing, and about what people are doing to bring the sense of touch to robots.

Why Don’t We See More Tactile Sensors?

There have been many tactile sensors over the years, such as the DIGIT sensors from Meta and the BioTac from SynTouch. Historically, these fit into the large set of robotic technologies that just didn’t work that well in practice: not because of anything wrong with the sensors themselves, which were often very good, but because they were expensive and it was hard to do anything interesting with them.

Even when you could do interesting things with these sensors, it was often easier not to exploit them at all: see DeXtreme by NVIDIA, which has SynTouch BioTacs mounted on the hand but doesn’t actually use any kind of tactile feedback!

Why? Because, again, they’re too hard to use. Sensors like the BioTac estimate forces at different positions, sure, but their signals are often difficult to model in simulation, and it is hard even to learn a dynamics model of them from data. There’s actually quite a bit of research devoted just to interpreting the signals coming out of the BioTac.

Other sensors have similar problems. DIGIT, for example, uses an internal camera to image the deformation of its elastomer skin, from which tactile forces, contact points, and contact geometry can be inferred.

This can be impressively powerful, and it produces a lot of information that a policy could potentially use. A recent version, Digit 360 [1], claims to:

resolve spatial features as small as 7 µm, sense normal and shear forces with a resolution of 1.01 mN and 1.27 mN, respectively, perceive vibrations up to 10 kHz, sense heat, and even sense odor

That is an impressive range. The problem is that to use any of these features, the robot first has to make contact with an object, which in most circumstances means it needs a camera to get there. And vision-based robot learning is very good, fairly well understood, and scales very well (it can even be pretrained on web data!); most importantly, cameras are reliable and dirt cheap.

For vision, we have robust pretrained backbones and standard architectures like ViT. Tactile sensors, by contrast, are varied; there’s no standard tactile sensor, if that would even make sense. It would be hard to collect a dataset large enough to build a tactile foundation model, since the sensors are so expensive; and, as I’ve covered before, the most important aspect of robotics scaling is diversity.

If the sensors aren’t widely used by different teams and can’t be deployed in a wide range of environments to collect varied task data, there’s little hope that tactile models will prove as useful as vision-based ones.

A related problem is that tactile sensing gets little benefit from imitation learning as it is usually practiced. When you teleoperate a robot, you might have haptic feedback (indicating forces exerted on the arms), but you will almost never have tactile feedback, and it’s honestly somewhat unclear how one would even relay tactile feedback to a teleoperator.

Because expert demonstrators don’t use tactile sensing to perform the task, even a robot equipped with tactile sensors probably won’t learn to leverage them very effectively. The tactile signal simply won’t be correlated with the demonstrated actions!

So, until recently, we haven’t had so many ways to benefit from tactile sensors. Fortunately, we’ve seen two big pushes in the community which might change that:

New sensors that might be easier to deploy broadly, letting many different teams use tactile sensing for their own problems.

New data-collection techniques that gather more useful tactile data at scale.

Improving Tactile Sensors

Historically, tactile sensors have been very expensive and of variable quality. Sensors like the BioTac and DIGIT mentioned above cost hundreds of dollars, are closed source, and come in very specific shapes that make them hard to employ in practice.

But there’s a new wave of open-source innovation changing tactile sensors.

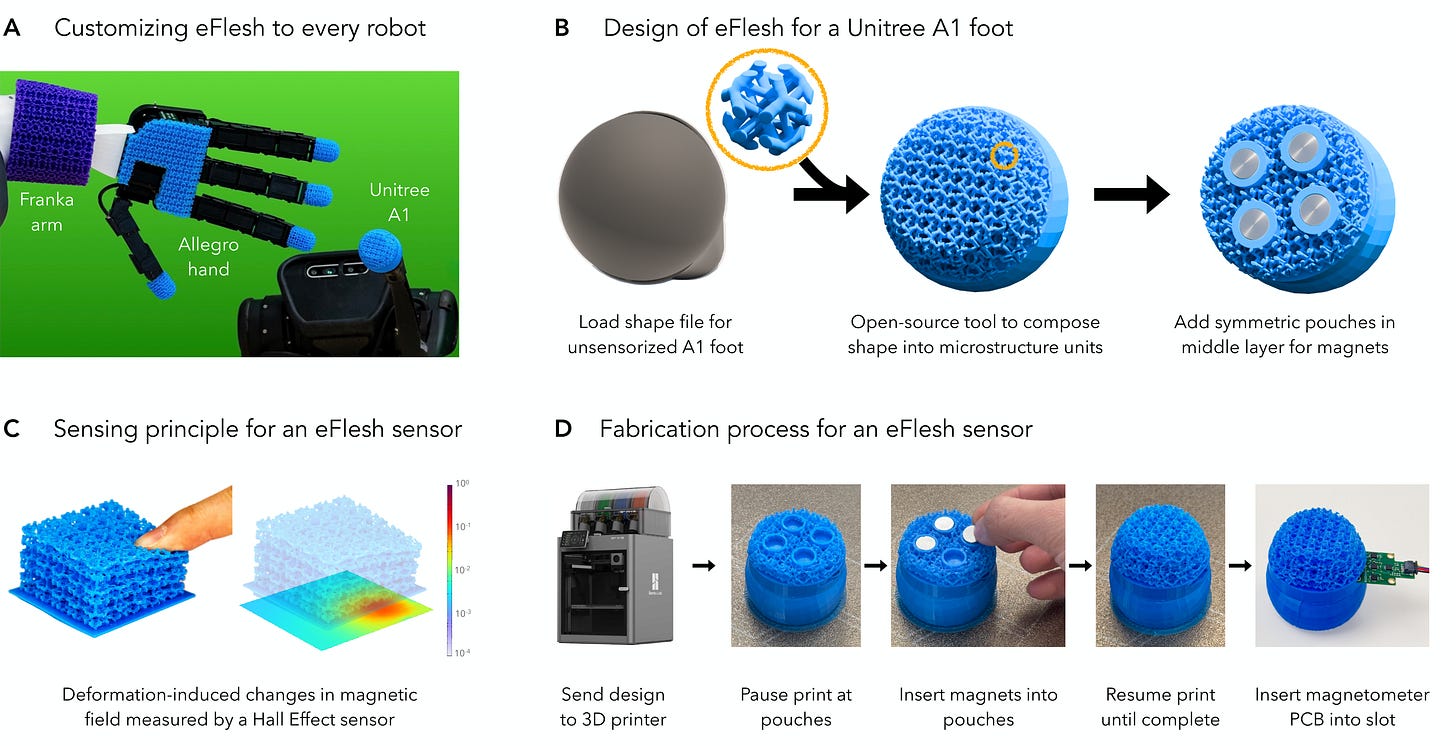

New tactile sensors like eFlesh [2] are more flexible than past designs, letting you create various shapes tailored to different robots, and they’re also much more affordable. With a circuit board, magnets, and a consumer 3D printer, you can build a sensor with only about a minute of active work (plus much more time waiting for the print to finish, of course).

Similarly, AnySkin [3] provides flat skins that can be added to many different types of robot grippers, including the same sort of Stick used in Robot Utility Models.

Both are magnetic tactile “skins,” and, of course, there’s a real need to interpret the signals those sensors produce. This is where methods like Sparsh come in [4]: Sparsh-skin, from Meta, uses self-supervised pretraining to learn representations of tactile-skin signals.
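To give a sense of what interpreting a magnetic skin involves at the most basic level: as the skin deforms, the magnetic material inside it moves, and magnetometers underneath report the change in field. The Python sketch below is a hypothetical first step, not code from AnySkin, eFlesh, or Sparsh. It averages a few no-contact frames to get a resting baseline per magnetometer, then flags any magnetometer whose field has moved more than a hand-picked threshold. The three-axis reading format, the two-sensor layout, and the threshold value are all assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

# One magnetometer reading as (Bx, By, Bz); units are whatever the sensor reports.
Reading = Tuple[float, float, float]


def estimate_baseline(frames: Sequence[Sequence[Reading]]) -> List[Reading]:
    """Average several no-contact frames to get each magnetometer's resting field."""
    n = len(frames)
    baseline = []
    for s in range(len(frames[0])):
        bx = sum(f[s][0] for f in frames) / n
        by = sum(f[s][1] for f in frames) / n
        bz = sum(f[s][2] for f in frames) / n
        baseline.append((bx, by, bz))
    return baseline


def contact_map(frame: Sequence[Reading], baseline: Sequence[Reading],
                threshold: float) -> List[bool]:
    """Flag each magnetometer whose field moved more than `threshold` from rest."""
    flags = []
    for (x, y, z), (bx, by, bz) in zip(frame, baseline):
        shift = math.sqrt((x - bx) ** 2 + (y - by) ** 2 + (z - bz) ** 2)
        flags.append(shift > threshold)
    return flags


# Hypothetical two-magnetometer skin: five resting frames, then a press near sensor 1.
rest = [[(10.0, 0.0, 50.0), (12.0, 1.0, 48.0)] for _ in range(5)]
base = estimate_baseline(rest)
pressed = [(10.0, 0.0, 50.2), (15.0, 1.0, 40.0)]
print(contact_map(pressed, base, threshold=2.0))  # [False, True]
```

A fixed threshold like this is exactly the kind of hand-tuned rule that tends to be brittle in practice, which is one reason learned representations such as Sparsh-skin are appealing.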

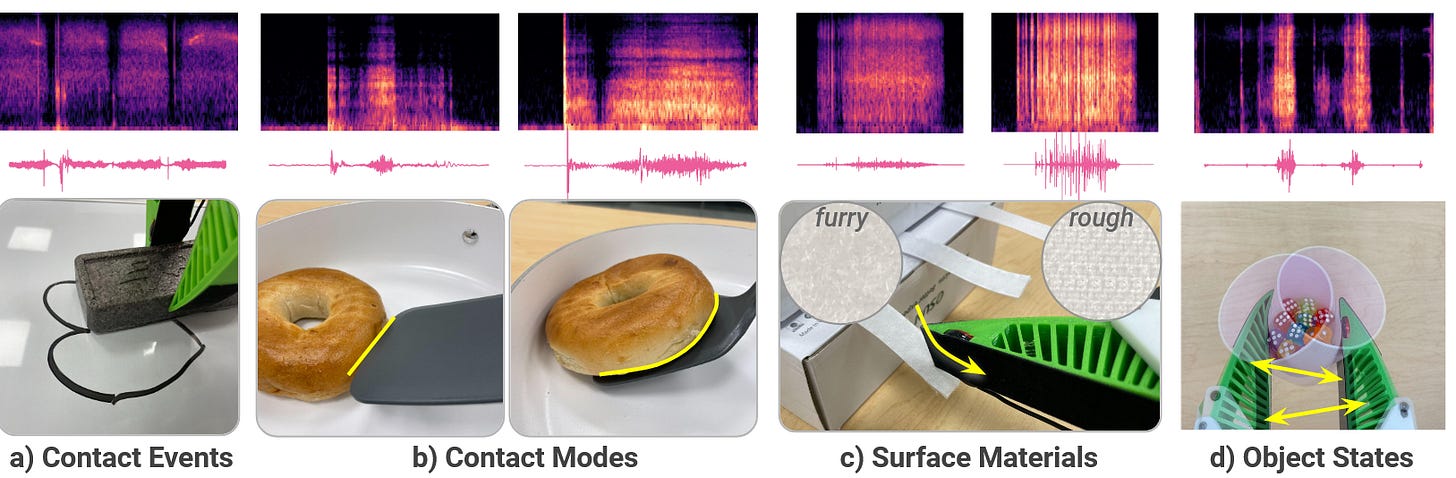

Another option, instead of these magnetic tactile skins, is simply to use audio. Microphones are incredibly cheap and available off the shelf, and they provide a very strong signal about contact. One example is ManiWAV, which used audio for a variety of contact-rich manipulation tasks [5]. Another project, SonicBoom, used a microphone array to localize contacts to within 2.22 cm in novel conditions, without needing a tactile skin [6].
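Because contact tends to produce a strong acoustic signal, even a very crude detector can illustrate the idea. As a hedged sketch (ManiWAV and SonicBoom learn from audio rather than using a hand-set rule like this), the code below splits a microphone signal into fixed-length frames, computes each frame’s root-mean-square energy, and flags frames that are several times louder than the median frame, using the median as a rough noise-floor estimate. The frame length of 256 samples and the ratio of 4 are arbitrary assumptions.

```python
import math
import statistics
from typing import List, Sequence


def frame_rms(signal: Sequence[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    energies = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return energies


def detect_contacts(signal: Sequence[float], frame_len: int = 256,
                    ratio: float = 4.0) -> List[int]:
    """Indices of frames louder than `ratio` times the median frame energy.

    The median serves as a crude estimate of the background noise floor.
    """
    energies = frame_rms(signal, frame_len)
    noise_floor = statistics.median(energies)
    return [i for i, e in enumerate(energies) if e > ratio * noise_floor]


# Synthetic microphone signal: quiet background with one loud burst in frame 5.
background = [0.01 if n % 2 == 0 else -0.01 for n in range(10 * 256)]
tap = list(background)
for n in range(5 * 256, 6 * 256):
    tap[n] = 1.0 if n % 2 == 0 else -1.0
print(detect_contacts(tap))  # [5]
```

Real recordings would likely also need filtering for motor and ambient noise before a rule like this became usable.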

Scaling Tactile Data

The biggest problem facing tactile sensing, though, comes down to the Bitter Lesson. Tactile sensing is very exciting and helpful for many tasks… but vision data is plentiful, we understand well how to simulate vision, and we can collect more visual data very easily.

Take a common master-slave teleoperation setup like Mobile ALOHA. There are two pairs of arms: one is used to control the robot (the master arms), and the other interacts with the world and actually performs the task (the slave arms). This sort of setup provides no tactile feedback to the human; at best, it allows coarse haptic feedback when the arm makes contact with the world.

Here’s the important part: if the human is performing the task without any tactile feedback, the demonstrations themselves will likely not be correlated with any tactile signal. This, in turn, means that even if we add tactile sensors to the inside of the gripper, it’s unlikely that the robot will learn to really take advantage of the sensors.
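The correlation argument can be made concrete with a toy simulation. In the hypothetical model below, each demonstration step records a tactile reading and a grip action. If the operator can only see the scene, their action follows a visual cue and is independent of the tactile reading; if they could feel contact, the action would track the tactile reading instead. This is a deliberately simplified sketch, since real contact dynamics couple actions and tactile readings over time; all variable names and noise levels are assumptions.

```python
import math
import random
import statistics
from typing import List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def simulate_demos(n: int, demonstrator_feels: bool,
                   seed: int = 0) -> Tuple[List[float], List[float]]:
    """Toy demonstrations: (tactile reading, grip action) pairs.

    A demonstrator without tactile feedback acts on a visual cue only, so the
    recorded action is independent of what the gripper's tactile sensor reads.
    """
    rng = random.Random(seed)
    tactile, actions = [], []
    for _ in range(n):
        touch = rng.gauss(0.0, 1.0)   # what the tactile sensor records
        visual = rng.gauss(0.0, 1.0)  # what the operator sees on camera
        cue = touch if demonstrator_feels else visual
        tactile.append(touch)
        actions.append(cue + rng.gauss(0.0, 0.1))  # small motor noise
    return tactile, actions


for feels in (False, True):
    t, a = simulate_demos(10_000, demonstrator_feels=feels)
    print(f"demonstrator feels contact: {feels}, corr = {pearson(t, a):.2f}")
```

Without tactile feedback the correlation comes out near zero, so in this toy setting a behavior-cloning policy finds nothing in the data linking the tactile input to the action; with feedback it is close to 1.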

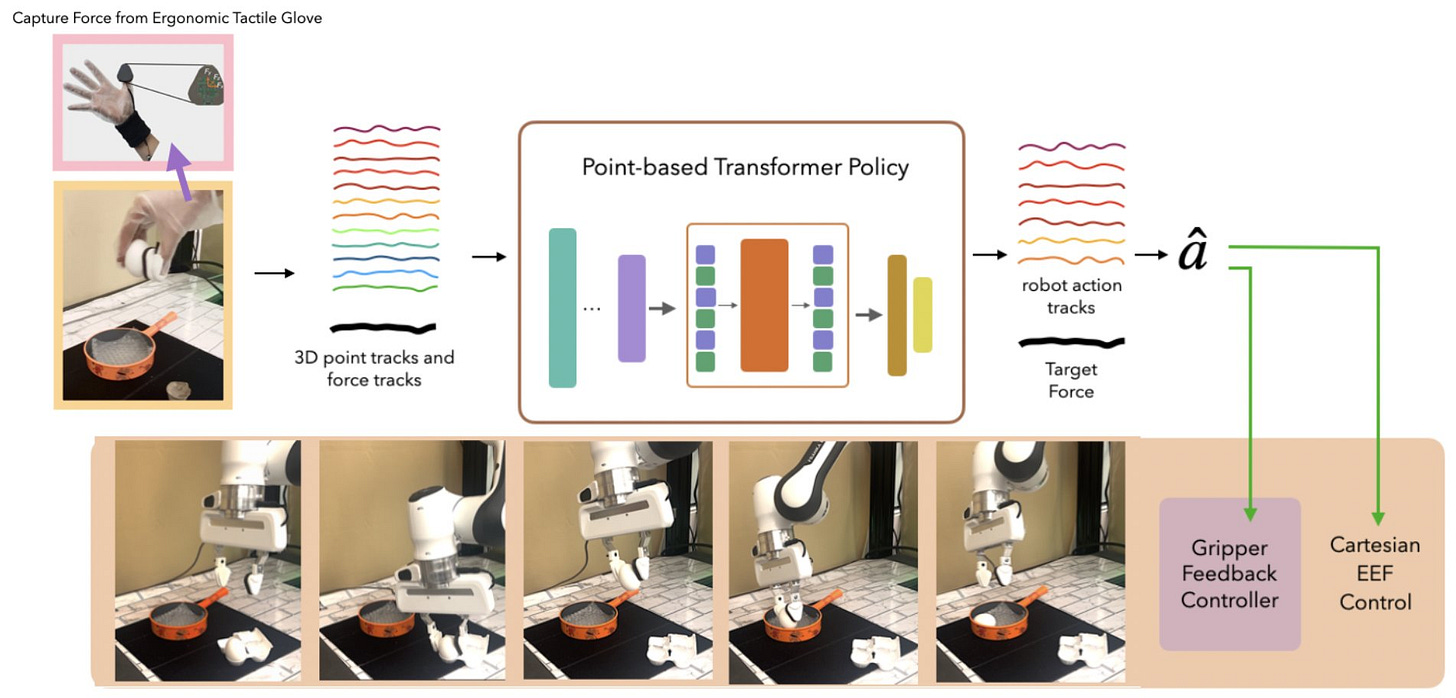

Fortunately, there are a couple of ways around this. Works like Feel The Force [7] and DexUMI [8] collect richer contact data via wearable sensors. In Feel The Force, this was an AnySkin pad on the user’s fingertip; in DexUMI, the authors designed a whole wearable hand exoskeleton that mimics the robot hand’s kinematics and carries tactile sensors. These techniques also have the advantage of being dramatically faster for data collection than traditional teleoperation, because they let the human act more naturally when interacting with the world.
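Once demonstrations include contact forces measured on the human’s fingertip, the robot still has to reproduce those forces. The sketch below is a generic proportional force-tracking loop, not the controller used in Feel The Force: the gripper closes until a hypothetical linear-spring contact model reports the target force. The contact model, stiffness, gain, and gripper limits are all assumed values.

```python
from dataclasses import dataclass


@dataclass
class SpringContact:
    """Hypothetical contact model: force grows linearly once the fingers
    close past the object's surface."""
    contact_width: float  # gripper opening (m) at which the fingers touch
    stiffness: float      # N per metre of squeeze

    def force(self, width: float) -> float:
        squeeze = self.contact_width - width
        return self.stiffness * squeeze if squeeze > 0 else 0.0


def track_force(target: float, obj: SpringContact, width: float = 0.08,
                gain: float = 2e-4, steps: int = 200,
                max_width: float = 0.08) -> float:
    """Close the gripper until the sensed force matches `target` (N), e.g. a
    force recorded on a human fingertip. Returns the final sensed force."""
    for _ in range(steps):
        error = target - obj.force(width)  # positive: squeeze harder
        width -= gain * error              # proportional update of the opening
        width = min(max(width, 0.0), max_width)
    return obj.force(width)


obj = SpringContact(contact_width=0.05, stiffness=1000.0)
print(f"{track_force(target=5.0, obj=obj):.2f} N")  # 5.00 N
```

In contact, each step shrinks the force error by a factor of (1 − gain × stiffness), here 0.8, so the loop only converges while gain × stiffness stays below 2. Since a real object’s stiffness is unknown, a conservative gain is the sensible choice.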

Final Thoughts

We currently don’t use tactile sensing for much, but my feeling is that this could change quickly. Techniques for tactile pretraining, along with more accessible and flexible tactile sensors, could let us scale tactile data quickly. The limiting factor for most robot learning work right now is scale, and tactile data, thus far, has been very resistant to scaling.

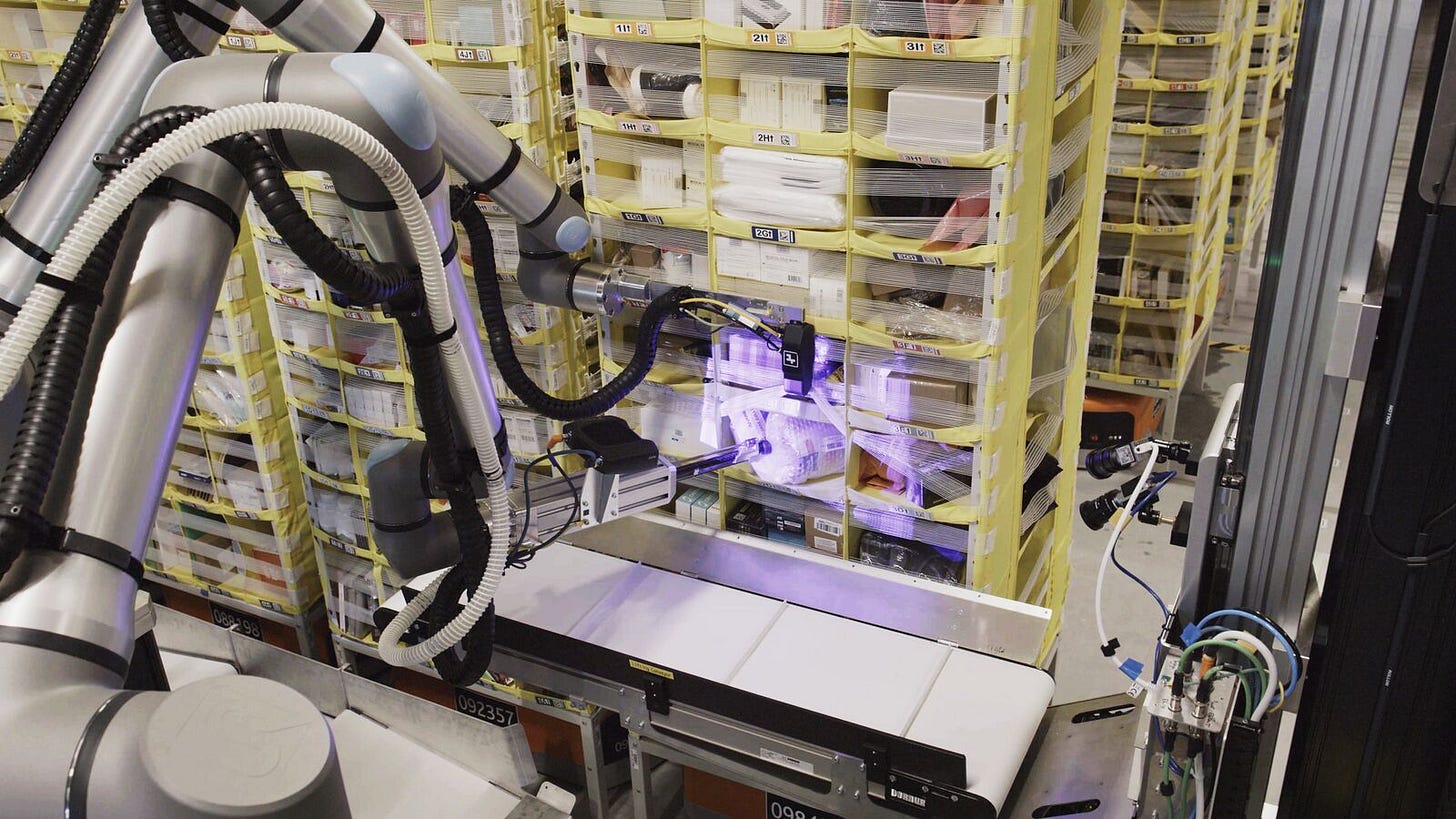

And take Amazon’s Vulcan robot as an example: contact sensing will be essential for high reliability across a wide range of tasks. Vulcan uses contact sensing for slip detection and adaptive grip force. Unlike cameras, tactile sensors cannot be occluded. They allow for slip detection via shear force, letting the robot exert more force when necessary without crushing the object it is holding. We see these strengths in action in recent research like V-HOP [9], which combines visual and haptic sensing to accurately track objects in hand.
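Slip detection from shear force rests on the Coulomb friction model: a contact holds as long as the tangential (shear) force stays inside the friction cone, |f_t| ≤ μ·f_n. The sketch below computes how much of that cone is in use and raises the commanded normal force just enough to restore a safety margin, capped so the gripper doesn’t crush the object. It assumes the friction coefficient μ is known and that the sensor reports shear and normal force directly; the margin and force cap are hypothetical values, and this illustrates the principle rather than how Vulcan or V-HOP actually work.

```python
import math


def slip_risk(shear_x: float, shear_y: float, normal: float, mu: float) -> float:
    """Fraction of the friction cone in use; values at or above 1.0 mean the
    contact slips under Coulomb friction (|f_t| <= mu * f_n holds it)."""
    if normal <= 0.0:
        return math.inf  # no normal force: any shear load slips
    return math.hypot(shear_x, shear_y) / (mu * normal)


def adapt_grip(shear_x: float, shear_y: float, normal: float, mu: float,
               margin: float = 0.7, max_normal: float = 20.0) -> float:
    """New normal-force command keeping cone usage at or below `margin`,
    never loosening the grip and never exceeding `max_normal` (anti-crush cap)."""
    needed = math.hypot(shear_x, shear_y) / (mu * margin)
    return min(max(normal, needed), max_normal)


shear_x, shear_y, normal, mu = 3.0, 4.0, 6.0, 0.5  # 5 N of shear on a 6 N grip
print(round(slip_risk(shear_x, shear_y, normal, mu), 2))  # 1.67: outside the cone
print(round(adapt_grip(shear_x, shear_y, normal, mu), 2))  # 14.29
```

The cap matters: if the required normal force exceeds `max_normal`, the function returns the cap and the contact may still slip, which is the trade-off between holding and crushing described above.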

Another thing I am very optimistic about is reinforcement learning, despite its limitations. I think one huge and understated limiting factor for tactile sensing is that human data-collection techniques don’t allow the tactile signal to be transferred or used well. This goes away if the robot is collecting its own data!

And in that setting we don’t need to worry as much about the limits of reinforcement learning, because we’re assuming a good base policy already exists; RL just has to optimize it, now with the new tactile sensors, until it succeeds all the time.

There are a lot of things to be excited about, but also a lot of open questions still. While we have not yet figured out what the correct tactile sensor is, there is great potential in this area, and I look forward to seeing what’s next.

References

[1] Lambeta, M., Wu, T., Sengul, A., Most, V. R., Black, N., Sawyer, K., ... & Calandra, R. (2024). Digitizing touch with an artificial multimodal fingertip. arXiv preprint arXiv:2411.02479.

[2] Pattabiraman, V., Huang, Z., Panozzo, D., Zorin, D., Pinto, L., & Bhirangi, R. (2025). eFlesh: Highly customizable Magnetic Touch Sensing using Cut-Cell Microstructures. arXiv preprint arXiv:2506.09994.

[3] Bhirangi, R., Pattabiraman, V., Erciyes, E., Cao, Y., Hellebrekers, T., & Pinto, L. (2024). AnySkin: Plug-and-play skin sensing for robotic touch. arXiv preprint arXiv:2409.08276.

[4] Sharma, A., Higuera, C., Bodduluri, C. K., Liu, Z., Fan, T., Hellebrekers, T., ... & Mukadam, M. (2025). Self-supervised perception for tactile skin covered dexterous hands. arXiv preprint arXiv:2505.11420.

[5] Liu, Z., Chi, C., Cousineau, E., Kuppuswamy, N., Burchfiel, B., & Song, S. (2024). ManiWAV: Learning robot manipulation from in-the-wild audio-visual data. In 8th Annual Conference on Robot Learning.

[6] Lee, M., Yoo, U., Oh, J., Ichnowski, J., Kantor, G., & Kroemer, O. (2024). SonicBoom: Contact Localization Using Array of Microphones. arXiv preprint arXiv:2412.09878.

[7] Adeniji, A., Chen, Z., Liu, V., Pattabiraman, V., Bhirangi, R., Haldar, S., ... & Pinto, L. (2025). Feel the Force: Contact-Driven Learning from Humans. arXiv preprint arXiv:2506.01944.

[8] Xu, M., Zhang, H., Hou, Y., Xu, Z., Fan, L., Veloso, M., & Song, S. (2025). DexUMI: Using Human Hand as the Universal Manipulation Interface for Dexterous Manipulation. arXiv preprint arXiv:2505.21864.

[9] Li, H., Jia, M., Akbulut, T., Xiang, Y., Konidaris, G., & Sridhar, S. (2025). V-HOP: Visuo-Haptic 6D Object Pose Tracking. arXiv preprint arXiv:2502.17434.
