Thoughts on Figure 03

One of the world's top humanoid robot companies releases an absolutely surreal set of videos showing off their newest robot

Figure has been in the news a lot lately. So, while I have a bunch of blog posts I plan to write (about the amazing Actuate, CoRL, and Humanoids conferences, from which I am still reeling), I thought it would be best to write some quick takes on what I think of this video and their other recent releases.

First, they recently announced an absolutely insane $1 billion USD raise on a $39 billion post-money valuation (source). This puts them close in valuation to companies like Ford, which has a roughly $45 billion market cap.

If they deliver on the promises they make in these videos, it’s easy to see why.

Next, they announce Project Go-Big, their plan to follow companies like Tesla who have increasingly been moving to learn from human video for their humanoid robots. Go-Big is an attempt to collect internet-scale video data, which they can use to better train robot foundation models.

And now we have this amazing blog post on Figure 03, showing a fancy new robot with new features performing all kinds of dexterous, interactive manipulation tasks. Nothing we see in these videos seems implausible based on modern learning techniques, but it showcases what seems to be incredibly high-quality hardware and a very mature data collection and training apparatus at Figure.

Read on for more thoughts. Warning, this post is fairly raw; it’s genuinely just my unfiltered takes as I read through these blog posts, with no special information or, honestly, any editing.

First, Go Big and Egocentric Video

Learning from human video is a huge trend, thanks in no small part to Tesla’s supreme interest in the area and the fact that they are reportedly switching much to all of their internal data collection over to use it. For my perspective, you can see my post on how to get enough data to train robot GPT:

But basically, it seems like an important part of a training mixture right now, as a multiplier to limited real-world data, at least until you can truly scale up to a fleet of tens of thousands of robots doing interesting work all the time. It does not seem like a panacea for robotics’ data woes on its own, due to persistent engineering issues around getting a perfect match between a human and a robot hand, as well as the loss of tactile and other sensory information associated with a demonstration.

None of these problems are insurmountable, though, and companies like Figure, Tesla, and all their competition have very robust and mature teleop data collection operations which should help provide the necessary real-world data to “ground” human videos.

Less postive side: they’ve only shown navigation, and navigation is easy, and this isn’t honestly a great way to do it. Navigation in homes is something I’ve spent a lot of time thinking about, and I think modular/map-based solutions still will have a substantial lead when it comes to any useful product — although increasingly these maps will be built using modern deep learning, as is currently done by home robot maker Matic.

Figure 03 Hardware

Figure 03 has a few interesting traits that stood out to me:

A cloth skin, which makes it look much more natural and a bit eerie

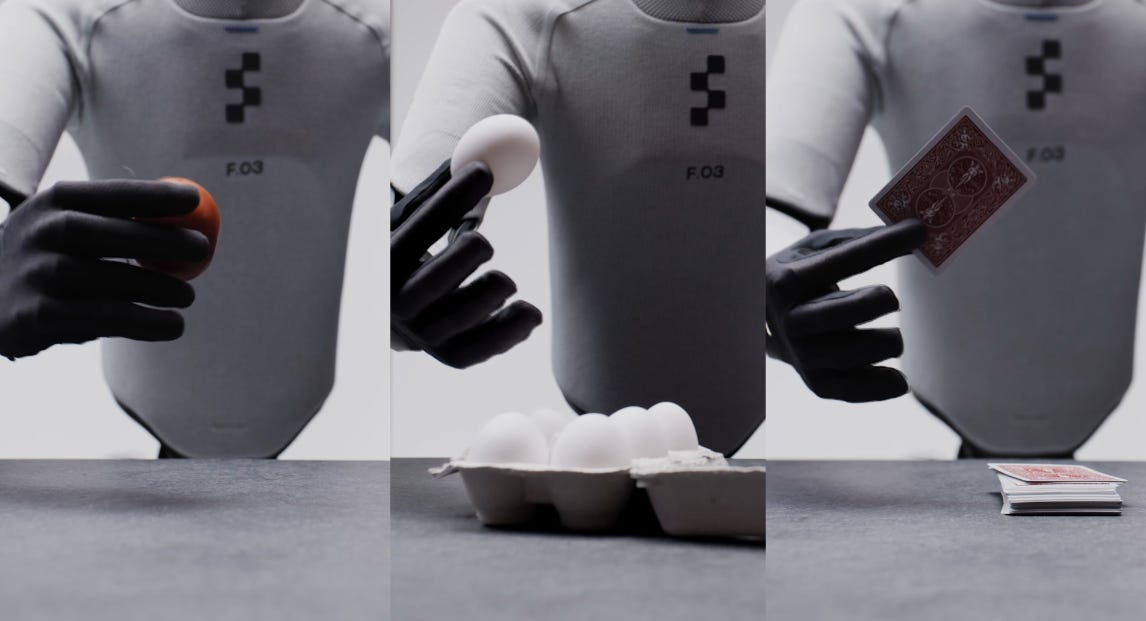

End effector cameras in the palm of the hand

A new custom, in-house built tactile sensor

Wireless inductive charging via the foot

a great design which should make reliable grasping of different objects much easier, but unfortunately does not help with placement; this means it’ll be relying purely on the head for that.

Contrast to an offset camera like you see with DexUMI or DexWild. With these, you get a much better view of the environment around the robot’s hand, even as it’s trying to grasp something. But this requires a bigger departure from the human form, and Figure has been religiously keeping their robot’s body as close to human as possible.

Also shameless plug for the RoboPapers podcast: learn more about DexWild (YouTube); learn more about DexUMI (YouTube).

On the tactile sensing front, I’m not too surprised. Tactile research has been growing in interest lately, which has resulted in a proliferation of new open-source tactile sensing designs which are lower cost, higher reliability, and smaller form-factor than bulky old-school sensors like the BioTac.

Giving Robots a Sense of Touch

While we humans are, largely, visual creatures, we can’t solely rely on our eyes to perform tasks. This is a contrast to most modern robotics AI, for which the best practice i…

The combination of tactile and end effector camera means that I think Figure’s new robot should be capable of very robust and reliable grasping — great news if you want it to handle your dishes!

Figure Use Cases

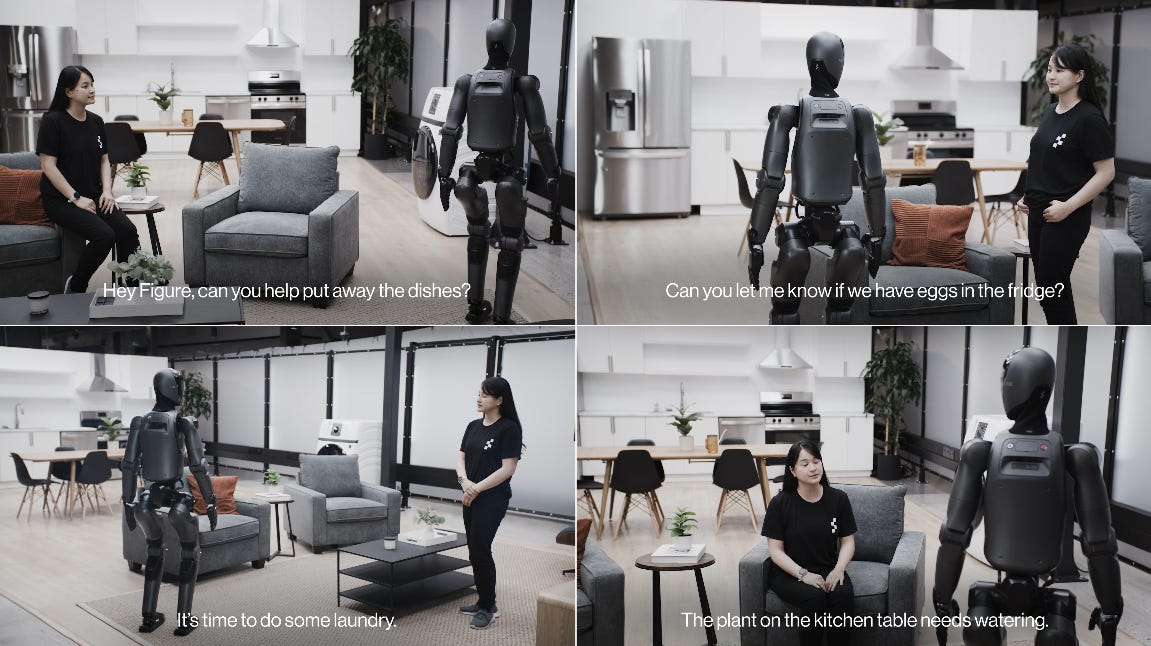

They demonstrate a few different use cases:

Receptionist (above), which I’m fairly skeptical of

Logistics: not just sorting packages but also delivering them

Home: cleanup, dishes, etc.

Missing was the impressive industrial use cases they’ve shown before:

I am not too surprised, especially given the cloth exterior and the general shift in focus. I’m not sure they’re abandoning industrial tasks, but it doesn’t seem as high priority — nor does an industrial task necessarily make sense for what Figure’s building. Other robots with radically less human form factors like those of Dexterity or Boston Dynamics might make more sense.

The home use case is incredibly exciting, though, and the speed improvements in logistics/package handling seem significant. The videos all look great, and you should check them out in the blog post I keep linking to.

What We Still Don’t See

Figure always seems to keep its leg planted when doing manipulation tasks. It seems like they’re still using a fairly standard model predictive control stack for much of their work; their walking videos have been historically blind and not too impressive compared to some others. And they never show stuff like this:

This video I’m including because it’s really, really cool looking work from Siheng Zhao at Amazon’s FAR. What’s interesting is seeing the robot use its whole body to manipulate stuff and interact with its environment; this is a huge part of the advantage of humanoids, so I hope we see more work in this direction from Figure soon.

Another thing we don’t see a lot of is Figure robots out and about in the wild. All of their demos look amazing, truly amazing — but you can see Unitree robots doing cool stuff all over the place, and you can see Tesla out and about at the Tesla Diner or at the Tron premier.

True, none of these are the kind of dexterous, contact-rich tasks that Figure is doing in their videos; Figure’s stuff is, in most ways, more impressive. But it’s so much easier to do something impressive if it’s in a controlled environment and you can re-shoot as necessary. I would love to see more videos of Figure out and about in the world, especially now that they’ve raised a massive amount of money and can potentially relax a bit more about their image.

Finally, we never see Figure robots taking any real abuse, getting shoved, tripping — dealing with all the realities a robot will need to suffer through. This is probably because their reinforcement learning control stack is still fairly new.

On Generalization

One final thing to note about videos: modern imitation learning is very, very good at overfitting. Whenever watching something like this, unless you see lots of variation — lighting, background, color — it’s hard to believe it’s generalizing a ton. Figure has shown clothes folding, then the same again with the table raised a few inches — a great sign that their policy is not overfitting just to a single manifold.

But still, a lot of their videos show stereotyped motions — always flattening a towel in the same way even when it doesn’t seem necessary, for example. Implies to me that there’s some limitations here, that generalization might not be as good as you would think.

But this is a long way from the generalization you expect to see in a home! I think there’s a long way to go and, bluntly, I think it would not work in your home today, and will not work in your home next year either. But maybe the year after that? Figure, fortunately, has the resources to last until the problem is solved.

Final Thoughts

It’s a cool robot, the demo videos are amazing, and the design decisions look good. Hope to see it out and about in the real world instead of in highly-produced videos, and I hope to see it taking a bit more abuse and using its whole body more often.

Let me know what you think below.

Neat write-up!

Re: Receptionist (above), which I’m fairly skeptical of

I’d love to hear your thoughts on why you’re skeptical about using Figure as a receptionist. Do you believe businesses might be hesitant to adopt it or do you see a technical limitation that prevents it from handling all the tasks a human receptionist would typically do?

Thanks for writing this.